Wikipedia:Wikipedia Signpost/2019-10-31/Recent research

Research at Wikimania 2019: More communication doesn't make editors more productive; Tor users doing good work; harmful content rare on English Wikipedia

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

Research presentations at Wikimania 2019

This year's Wikimania community conference in Stockholm, Sweden featured a well-attended Research Space, a 2.5-day track of presentations, tutorials, and lightning talks. Among them:

"All Talk: How Increasing Interpersonal Communication on Wikis May Not Enhance Productivity"

Enabling "easier direct messaging [on a wiki] increases... messaging. No change to article production. Newcomers may make fewer contributions", according to this presentation of an upcoming paper studying the effect of a "message walls" feature on Wikia/Fandom wikis that offered a more user-friendly alternative to the existing user talk pages. From the abstract:

Presentation about a paper titled "Tor Users Contributing to Wikipedia: Just Like Everybody Else?", an analysis of the quality of edits that slipped through Wikipedia's general block of the Tor anonymizing tool. From the abstract:

See also our coverage of a related paper by some of the same authors: "Privacy, anonymity, and perceived risk in open collaboration: a study of Tor users and Wikipedians"

Discussion summarization tool to help with Requests for Comments (RfCs) going stale

"Supporting deliberation and resolution on Wikipedia" - presentation about the "Wikum" online tool for summarizing large discussion threads and a related paper, quote:

The research was presented in 2018 at the CSCW conference and at the Wikimedia Research Showcase. See also press release: "Why some Wikipedia disputes go unresolved. Study identifies reasons for unsettled editing disagreements and offers predictive tools that could improve deliberation.", dataset, and our previous coverage: "Wikum: bridging discussion forums and wikis using recursive summarization".

See our 2016 review of the underlying paper: "A new algorithmic tool for analyzing rationales on articles for deletion" and related coverage

Presentation about ongoing survey research by the Wikimedia Foundation focusing on reader demographics, e.g. finding that the majority of readers of "non-colonial" language versions of Wikipedia are monolingual native speakers (i.e. don't understand English).

Wikipedia citations (footnotes) are clicked in only one of every 200 pageviews

A presentation about an ongoing project to analyze the usage of citations on Wikipedia highlighted this result among others.

See last month's OpenSym coverage about the same research.

About the "Wikipedia Insights" tool for studying Wikipedia pageviews. See also our earlier mention of an underlying paper.

Harmful content rare on English Wikipedia

The presentation "Understanding content moderation on English Wikipedia" by researchers from Harvard University's Berkman Klein Center reported on an ongoing project, finding e.g. that only about 0.2% of revisions contain harmful content, and concluding that "English Wikipedia seems to be doing a pretty good job [removing harmful content - but:] Folks on the receiving end probably don't feel that way."

Presentation about "on-going work on English Wikipedia to assist checkusers to efficiently surface sockpuppet accounts using machine learning" (see also research project page)

Demonstration of "Wiki Atlas, [...] a web platform that enables the exploration of Wikipedia content in a manner that explicitly links geography and knowledge", and a (prototype) augmented reality app that shows Wikipedia articles about e.g. buildings.

Presentation of ongoing research detecting subject matter experts among Wikipedia contributors using machine learning. Among the findings: Subject matter experts concentrate their activity within a topic area, focusing on adding content and referencing external sources. Their edits persist 3.5 times longer than those of other editors. In an analysis of 300,000 editors, 14-32% were classified as subject matter experts.

Why Apple's Siri relies on data from Wikipedia infoboxes instead of (just) Wikidata

The presentation "Improving Knowledge Base Construction from Robust Infobox Extraction", about a paper already highlighted in our July issue, explained a method used to ingest facts from Wikipedia infoboxes into the knowledge base underlying Apple's Siri question answering system. The speaker noted the decision not to rely solely on Wikidata for this purpose, because Wikipedia still offers richer information than Wikidata, especially on less popular topics. An audience member asked what Apple might be able to give back to the Wikimedia community from this work on extracting and processing knowledge for Siri. The presenter responded that publishing this research was already the first step, and that more would depend on support from higher-ups at the company.

From the abstract of the underlying paper:

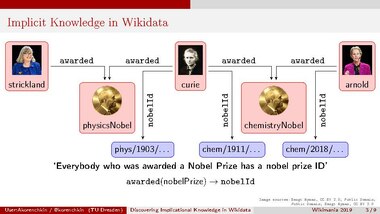

"A distinguishing feature of Wikidata [among other knowledge graphs such as Google's "Knowledge Graph" or DBpedia] is that the knowledge is collaboratively edited and curated. While this greatly enhances the scope of Wikidata, it also makes it impossible for a single individual to grasp complex connections between properties or understand the global impact of edits in the graph. We apply Formal Concept Analysis to efficiently identify comprehensible implications that are implicitly present in the data. [...] We demonstrate the practical feasibility of our approach through several experiments and show that the results may lead to the discovery of interesting implicational knowledge. Besides providing a method for obtaining large real-world data sets for FCA, we sketch potential applications in offering semantic assistance for editing and curating Wikidata."

See last month's OpenSym coverage about the same research

The now traditional annual overview of scholarship and academic research on Wikipedia and other Wikimedia projects from the past year (building on this research newsletter). Topic areas this year included the gender gap, readability, article quality, and measuring the impact of Wikimedia projects on the world. Presentation slides

Other events

See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

- Compiled by Tilman Bayer and Miriam Redi

"Revealing the Role of User Moods in Struggling Search Tasks"

In search tasks in Wikipedia, people who are in unpleasant moods tend to issue more queries and perceive a higher level of difficulty than people in neutral moods.

Helping students find a research advisor, with Google Scholar and Wikipedia

This paper, titled "Building a Knowledge Graph for Recommending Experts", describes a method to build a knowledge graph by integrating data from Google Scholar and Wikipedia to help students find a research advisor or thesis committee member.

"Uncovering the Semantics of Wikipedia Categories"

From the abstract:

"Adapting NMT to caption translation in Wikimedia Commons for low-resource languages"

This paper describes a system to generate Spanish-Basque and English-Irish translations for image captions in Wikimedia Commons.

"Automatic Detection of Online Abuse and Analysis of Problematic Users in Wikipedia"

About an abuse detection model that leverages Natural Language Processing techniques, reaching an accuracy of ∼85% (see also research project page on Meta-wiki, university page: "Of Trolls and Troublemakers", research showcase presentation).

"Self Attentive Edit Quality Prediction in Wikipedia"

A method to infer edit quality directly from the edit's textual content using deep encoders, and a novel dataset containing ∼21M revisions across 32K Wikipedia pages.

"TableNet: An Approach for Determining Fine-grained Relations for Wikipedia Tables"

From the abstract:

"Training and hackathon on building biodiversity knowledge graphs" with Wikidata

From the abstract and conclusions:

"Spectral Clustering Wikipedia Keyword-Based Search Results"

From the abstract:

"Indigenous Knowledge for Wikipedia: A Case Study with an OvaHerero Community in Eastern Namibia"

From the abstract:

"On Persuading an OvaHerero Community to Join the Wikipedia Community"

From the abstract:

Discuss this story

Regarding the one in every 200 pageviews leading to a citation ref click, that's surprisingly low given the editor-side emphasis on WP:RS. It would be good to see more students being explicitly taught information literacy and best practices specifically for reading Wikipedia. The nearest reader-side resources I know of within Wikipedia are Help:Wikipedia:_The_Missing_Manual/Appendixes/Reader's_guide_to_Wikipedia and Wikipedia:Research_help, but they don't really cover the relevant topics, e.g. why and how to check the references. T.Shafee(Evo&Evo)talk 01:33, 1 November 2019 (UTC)

"All Talk" flaws

This study may have measured the wrong things. The goal was assumed to be "productivity" as defined by article contributions, but there was no theoretical justification given for this decision. Currently, the best model for what we want to see happen for newcomers is that their first edits aren't reverted, and that the editors stick around and stay active for a long time. See meta:Research:New_editors'_first_session_and_retention. Adamw (talk) 13:39, 5 November 2019 (UTC)

Characterizing Reader Behavior on Wikipedia

Anyone else notice the striking statistic that over 1/3rd of English Wikipedia readers are not native English speakers? I'm a native English speaker and have a hard time reading a lot of our articles (due to the high prevalence of jargon, overly complex run-on sentences, and mangled grammar). Perhaps we should make more of an effort to make our articles readable rather than trying (poorly) to make them sound academic and erudite. Kaldari (talk) 15:30, 10 November 2019 (UTC)

Image for Wikidata item

Is there a particular point in using an image that shows only women to illustrate the Wikidata item about knowledge graphs? I don't want to make any assumptions here, other than that it seems obvious that someone (singular or plural) made the decision and had reasons for it. – Athaenara ✉ 19:15, 26 November 2019 (UTC)