Wikipedia:Wikipedia Signpost/2023-07-17/Recent research

Wikipedia-grounded chatbot "outperforms all baselines" on factual accuracy

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

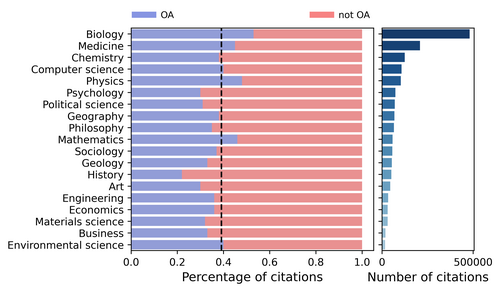

Wikipedia and open access

- Reviewed by Nicolas Jullien

From the abstract:

Why does it matter for the Wikipedia community?

This article is a first draft of an analysis of the relationship between the availability of a scientific journal as open access and the fact that it is cited in the English Wikipedia (note: although it speaks of "Wikipedia", the article looks only at the English pages). It is a preprint and has not been peer-reviewed, so its results should be read with caution, especially since I am not sure about the robustness of the model and the results derived from it (see below). It is of course a very important issue, as access to scientific sources is key to the diffusion of scientific knowledge, but also, as the authors mention, because Wikipedia is seen as central to the diffusion of scientific facts (and is sometimes used by scientists to push their ideas).

Review

The results presented in the article (and its abstract) highlight two important issues for Wikipedia that will likely be addressed in a more complete version of the paper:

- The question of the reliability of the sources used by Wikipedians

- → The regressions seem to indicate that a journal's reputation has no bearing on whether it is cited in Wikipedia.

- → Predatory journals are known to be open access more often than classical journals, so this result potentially indicates that open access reduces the seriousness of Wikipedia's sources.

The authors say on p. 4 that they provided "each journal with an SJR score, H-index, and other relevant information." Why did they not use this as a control variable? (This echoes a debate on the role of Wikipedia: is it to disseminate verified knowledge, or to serve as a platform for the dissemination of new theories? The authors seem to lean towards the second view: p. 2: "With the rapid development of the Internet, traditional peer review and journal publication can no longer meet the need for the development of new ideas".)

- The solidity of the paper's conclusions

- The authors said: "STEM fields, especially biology and medicine, comprise the most prominent scientific topics in Wikipedia [17]." "General science, technology, and biomedical research have relatively higher OA rates."

- → So it is to be expected that, on average, Wikipedia cites a larger share of open access articles than is found in the research corpus as a whole, which could by itself explain the finding that open access articles are cited more.

- → Why not control for academic discipline in the models?

More problematic (and acknowledged by the authors, so probably in the process of being addressed), the authors say on p. 7 that they built their model on the assumption that the age of a research article and the number of citations it has received both influence the probability of its being cited in Wikipedia. Of course, for this causal effect to hold, the age and the number of citations must be measured at the moment the article is cited in Wikipedia. For example, if some of the citations were made after the citation in Wikipedia, one could argue that the causal effect runs in the other direction. Also, many articles become open access after an embargo period, and are therefore counted as open access in the analysis, whereas they may have been cited in Wikipedia while still under embargo. The authors did not check for this, as acknowledged in the last sentence of the article. Would their result hold if they re-estimated their model using, for example, the first citation in the English Wikipedia, and the age of the article, its open access status, etc. at that moment?

In short

Although this first draft is probably not solid enough to be cited in Wikipedia, it signals important research in progress, and I am sure that the richness of the data and the quality of the team will quickly lead to very interesting insights for the Wikipedia community.

Related earlier coverage

- "Quantifying Engagement with Citations on Wikipedia" (about a 2020 paper that among other results found that "open access sources [...] are particularly popular" with readers)

- "English Wikipedia lacking in open access references" (2022)

"Controversies over Historical Revisionism in Wikipedia"

- Reviewed by Andreas Kolbe

From the abstract:

This brief study, one of the extended abstracts accepted at the Wiki Workshop (10th edition), follows up on reports that some historical pages on the Japanese Wikipedia, particularly those related to World War II and war crimes, have been edited in ways that reflect radical right-wing ideas (see previous Signpost coverage). It sets out to answer three questions:

- What types of historical topics are most susceptible to historical revisionism?

- What are the common factors for the historical topics that are subject to revisionism?

- Are there groups of editors who are seeking to disseminate revisionist narratives?

The study focuses on the level of controversy of historical articles, based on the notion that the introduction of revisionism is likely to lead to edit wars. The authors found that the most controversial historical articles in the Japanese Wikipedia were indeed focused on areas that are of particular interest to revisionists. From the findings:

The paper establishes that articles covering these topic areas in the Japanese Wikipedia are contested and subject to edit wars. However, it does not measure to what extent article content has been compromised. Edit wars could be a sign of mainstream editors pushing back against revisionists, while conversely an absence of edit wars could indicate that a project has been captured (cf. the Croatian Wikipedia). While this little paper is a useful start, further research on the Japanese Wikipedia seems warranted.

See also our earlier coverage of a related paper: "Wikimedia Foundation builds 'Knowledge Integrity Risk Observatory' to enable communities to monitor at-risk Wikipedias"

Wikipedia-based LLM chatbot "outperforms all baselines" regarding factual accuracy

- Reviewed by Tilman Bayer

This preprint (by three graduate students at Stanford University's computer science department and Monica S. Lam as fourth author) discusses the construction of a Wikipedia-based chatbot:

The paper sets out from the observation that

The researchers argue that "most chatbots are evaluated only on static crowdsourced benchmarks like Wizard of Wikipedia (Dinan et al., 2019) and Wizard of Internet (Komeili et al., 2022). Even when human evaluation is used, evaluation is conducted only on familiar discussion topics. This leads to an overestimation of the capabilities of chatbots." They call such topics "head topics" ("Examples include Albert Einstein or FC Barcelona"). In contrast, the lesser known "tail topics [are] likely to be present in the pre-training data of LLMs at low frequency. Examples include Thomas Percy Hilditch or Hell's Kitchen Suomi". As a third category, they consider "recent topics" ("topics that happened in 2023, and therefore are absent from the pre-training corpus of LLMs, even though some background information about them could be present. Examples include Spare (memoir) or 2023 Australian Open"). The latter are obtained from a list of most edited Wikipedia articles in early 2023.

Regarding the "core verification problem [...] whether a claim is backed up by the retrieved paragraphs [the researchers] found that there is a significant gap between LLMs (even GPT-4) and human performance [...]. Therefore, we conduct human evaluation via crowdsourcing, to classify each claim as supported, refuted, or [not having] enough information." (This observation may be of interest regarding efforts to use LLMs as tools for Wikipedians to check the integrity of citations on Wikipedia. See also the "WiCE" paper below.)

In contrast, the evaluation for "conversationality" is conducted "with simulated users using LLMs. LLMs are good at simulating users: they have the general familiarity with world knowledge and know how users behave socially. They are free to occasionally hallucinate, make mistakes, and repeat or even contradict themselves, as human users sometimes do."

In the paper's evaluation, WikiChat impressively outperforms the two comparison baselines in all three topic areas (even the well-known "head" topics). It may be worth noting though that the comparison did not include widely used chatbots such as ChatGPT or Bing AI. Instead, the authors chose to compare their chatbot with Atlas (describing it as based on a retrieval-augmented language model that is "state-of-the-art [...] on the KILT benchmark") and GPT-3.5 (while ChatGPT is or has been based on GPT-3.5 too, it involved extensive additional finetuning by humans).

Briefly

- Compiled by Tilman Bayer

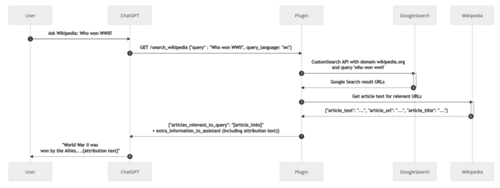

Wikimedia Foundation launches experimental ChatGPT plugin for Wikipedia

As part of an effort "to understand how Wikimedia can become the essential infrastructure of free knowledge in a possible future state where AI transforms knowledge search", on July 13 the Wikimedia Foundation announced a new Wikipedia-based plugin for ChatGPT. (Such third-party plugins are currently available to all subscribers of ChatGPT Plus, OpenAI's paid variant of their chatbot; the Wikipedia plugin's code itself is available as open source.) The Foundation describes it as an experiment designed to answer research questions such as "whether users of AI assistants like ChatGPT are interested in getting summaries of verifiable knowledge from Wikipedia".

The plugin works by first performing a Google site search on Wikipedia to find articles matching the user's query, and then passing the first few paragraphs of each article's text to ChatGPT, together with additional (hidden) instruction prompts on how the assistant should use them to generate an answer for the user (e.g. "In ALL responses, Assistant MUST always link to the Wikipedia articles used").
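The prompt-assembly step described above can be sketched roughly as follows. This is a minimal illustration, not the plugin's actual code: the `Article` container, the function names, and the three-paragraph default are hypothetical, and the search step is assumed to have already returned the matching articles. Only the quoted instruction string comes from the text above.

```python
from dataclasses import dataclass

# Quoted above as an example of the plugin's hidden instruction prompts.
LINK_INSTRUCTION = (
    "In ALL responses, Assistant MUST always link to the Wikipedia articles used"
)


@dataclass
class Article:
    """A retrieved article (hypothetical container, not the plugin's own type)."""
    title: str
    url: str
    text: str  # plain text, paragraphs separated by blank lines


def lead_paragraphs(text: str, limit: int = 3) -> list[str]:
    """Return the first `limit` non-empty paragraphs of an article."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return paragraphs[:limit]


def build_prompt(query: str, articles: list[Article], limit: int = 3) -> str:
    """Combine the hidden instruction, article excerpts, and the user's query."""
    parts = [LINK_INSTRUCTION, ""]
    for article in articles:
        parts.append(f"Source: {article.title} ({article.url})")
        parts.extend(lead_paragraphs(article.text, limit))
        parts.append("")  # blank line between sources
    parts.append(f"User question: {query}")
    return "\n".join(parts)
```

Passing only the lead paragraphs keeps the prompt short, at the cost of missing facts that appear further down an article.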

Wikimedia Foundation Research report

The Wikimedia Foundation's Research department has published its biannual activity report, covering the work of the department's 10 staff members as well as its contractors and formal collaborators during the first half of 2023.

New per-country pageview dataset

The Wikimedia Foundation announced the public release of "almost 8 years of pageview data, partitioned by country, project, and page", sanitized using differential privacy to protect sensitive information. See the documentation.

Wikimedia Research Showcase

See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

- Compiled by Tilman Bayer

Prompting ChatGPT to answer according to Wikipedia reduces hallucinations

From the abstract:

The authors tested several variations of such "grounding prompts" (e.g. "As an expert editor for Wikipedia, I am confident in the following answer." or "I found some results for that on Wikipedia. Here’s a direct quote:"). The best performing prompt was "Respond to this question using only information that can be attributed to Wikipedia".
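As a rough illustration of how such grounding prompts might be combined with a question before it is sent to a model, consider the sketch below. The function names and the choice to place the grounding text before the question are assumptions made for this example; the paper's exact prompt layout is not reproduced here. The three prompt strings are the ones quoted above.

```python
# Grounding prompts quoted in the text above.
GROUNDING_PROMPTS = [
    "Respond to this question using only information that can be attributed to Wikipedia",
    "As an expert editor for Wikipedia, I am confident in the following answer.",
    "I found some results for that on Wikipedia. Here’s a direct quote:",
]


def add_grounding(question: str, grounding: str = GROUNDING_PROMPTS[0]) -> str:
    """Prefix a question with a grounding prompt (placement is an assumption)."""
    return f"{grounding}\n\n{question.strip()}"


def grounded_variants(question: str) -> dict[str, str]:
    """Build one prompt per grounding variant, e.g. for a side-by-side comparison."""
    return {g: add_grounding(question, g) for g in GROUNDING_PROMPTS}
```

Each variant could then be sent to the same model, so that the answers differ only in the grounding text used.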

"Citations as Queries: Source Attribution Using Language Models as Rerankers"

From the abstract:

"WiCE: Real-World Entailment for Claims in Wikipedia"

From the abstract:

The preprint gives the following examples of such an automatic decomposition performed by GPT-3 (using the prompt "Segment the following sentence into individual facts:" accompanied by several instructional examples):

"SWiPE: A Dataset for Document-Level Simplification of Wikipedia Pages"

From the abstract:

"Descartes: Generating Short Descriptions of Wikipedia Articles"

From the abstract:

"WikiDes: A Wikipedia-based dataset for generating short descriptions from paragraphs"

From the abstract:

From the introduction:

See also the "Descartes" paper (above).

"Can Language Models Identify Wikipedia Articles with Readability and Style Issues?"

From the abstract:

"Wikibio: a Semantic Resource for the Intersectional Analysis of Biographical Events"

From the abstract:

"Detecting Cross-Lingual Information Gaps in Wikipedia"

From the abstract:

From the paper:

"Wikidata: The Making Of"

From the abstract:

"Mining the History Sections of Wikipedia Articles on Science and Technology"

From the abstract:

Discuss this story

Presumably the preprint about WiCE, after giving the example quoted above, goes on to discuss the problems with both the sentence from the article Santa Maria della Pietà, Prato ("13th-century icon" is not supported by the source) and the "sub-claims" GPT-3 generated from it (clearly the "icon" can't be both 13th-century and from 1638)? If so, what does it say? I think the original source has misunderstood that the 14th-century image itself (attributed to Giovanni Bonsi), as opposed to a "depiction of the miraculous event" (unspecified, but it occurred in the 17th century), is the fresco at the centre of the later altarpiece (painted by Mario Balassi in 1638, and on canvas rather than in fresco according to the Italian Wikipedia article), so that doesn't help. Ham II (talk) 11:23, 17 July 2023 (UTC)

Thanks and small correction on Wikipedia ChatGPT plugin

Thanks for covering this work! One small correction RE:

This was true of the earliest version of the plugin, but for production we've switched to leveraging the Wikimedia Search API to find articles matching the user's query. We'll update the docs/README to reflect this (our quick R&D on this outpaced our technical documentation, but catching up now)! Maryana (WMF) (talk) 22:10, 17 July 2023 (UTC)

"google_search_is_enabled"), but that the latter is selected as the preferred search provider right now in the settings. (Feel free to correct me as I may have misread the code.)